In a relatively low-level system like C/C++, where threads can access shared data however they want, programmers must deal with this. A compiler cannot handle such issues because it does not know what data a thread will access at runtime.

Thinking through this example helped me a lot: \n Thread 0 with 4 bytes of data starting at 0x0 Thread 1 with 4 bytes of data starting at 0x4 Cache lines of size 0x8 Thread 0 get cache lines in M state with its write. Thread 1 wants to write, has to move cache line into I state for thread 0, and I -> M state for itself. Thread 0 wants to write, has to move cache line into I state for thread 0, and I-> M state for itself.

However, if thread 0's state and thread 1's state are on two separate cache lines, both threads have their own cache line in the M state the whole time!

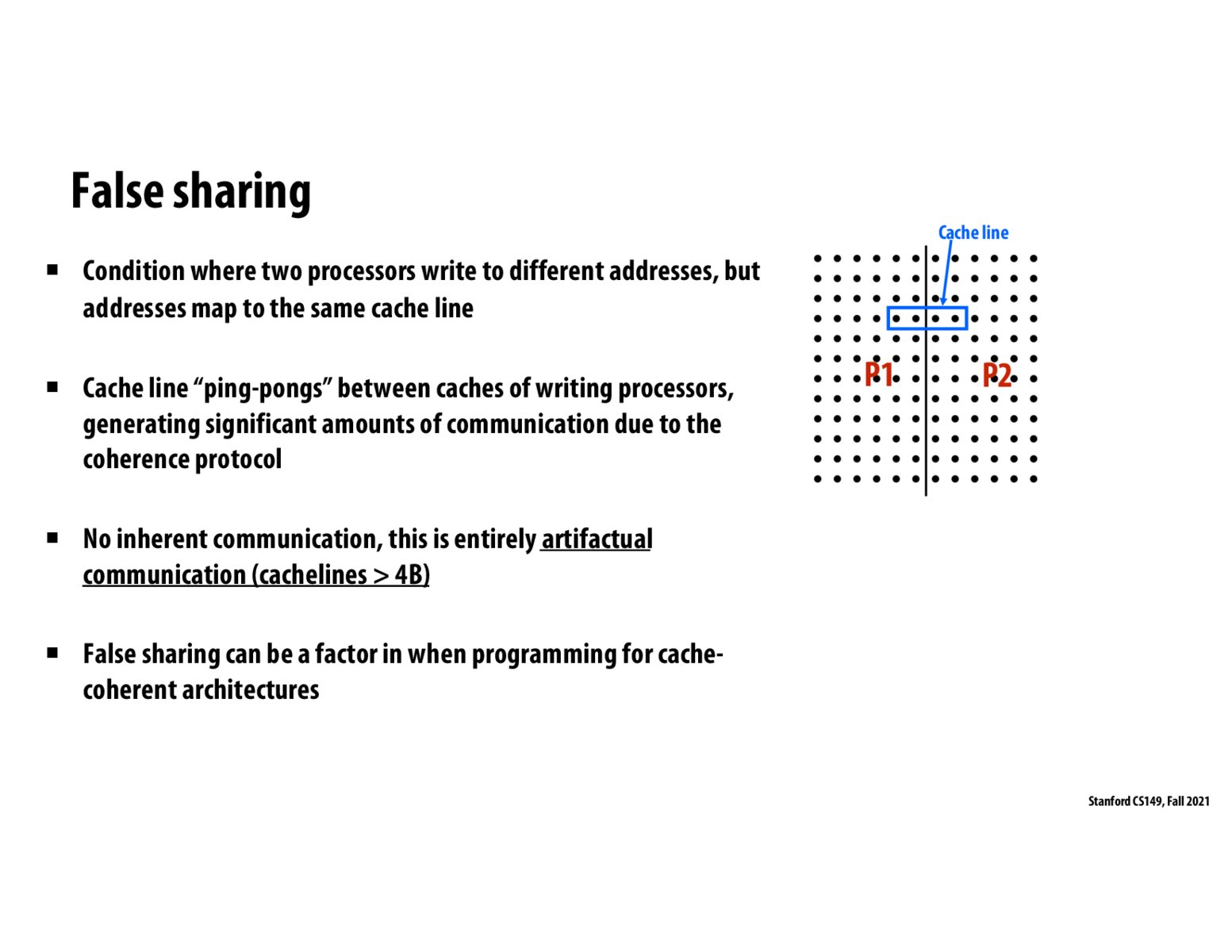

I don't understand why two different address could end up on the same cache line - any examples?

I think this can happen if the cache lines are long and they between different processors. In other words, artifactual communication occurs because of not keeping coherence on individual memory boundaries but keeping it on the basis of cache lines instead

How is false sharing alleviated, if at all? It appears that using smaller cache lines would help since there's less possibility of another wanting the exact same cache line

Why is the cache partitioned into P1 and P2? I thought I had intuitively understood everything (matches Julie's explanation) before Kunle explained it, but after he did, these details of partitioning and the weird cache line positioning between the partitions confused me.

The cache is not partitioned. Remember the address space is broken up into cache line sized pieced of data that are the unit of data transfer to and from memory (DRAM) and the caches.

The illustration here is showing what data element processors 1 and 2 access. Notice that both processors 1 and 2 access different parts of the same cache line.

To check my own understanding, what is the difference between this scenario (two processors write to different addresses, but the addresses map to the same cache line), versus a scenario where we only had one processor that wrote to both of those addresses in an alternating fashion, and the addresses map to the same cache line?

In the latter case, would the single processor just not have to flush the cache line and write to memory as often, this resulting in less communication overhead and more efficiency (fewer cycles per thread to do the computation)?

@kayvonf, yes ok that makes sense - it's more of an upsetting coincidence that P2's data happened to be fetched together with P1's data. If P1's cache line load did not contain P2's data, then performance would be a lot higher because there's no false sharing. Got it.

@thepurplecow, I don't think you would have the scenario where you would be writing to two different addresses that wrote to the same cache line with only 1 processor. This scenario arises because different processors have their own virtual memory address, so these different virtual memory addresses could map to the same cache address. However, let's say for a moment that a processor (P1) has two pieces of data (D1, D2) that exist in the same cache line and it's repeatedly modifying them in an alternating fashion, there's no need to make more bus transactions because... it's the sole writer and sole reader, so there aren't other processors threatening cache consistency here. Even if there were other processors, just that they aren't interfering with this cache line, P1 still wouldn't have to keep issuing BusRdX transactions because only P1 would have this cache line in "M" state upon the first modification call to either D1 or D2.

I might be wrong though, it'd be great if somebody could confirm.

What are the tradeoffs when it comes to deciding the sizes of cache lines? Wouldn't it make sense to make cache lines smaller in order to avoid false sharing?

Please log in to leave a comment.

Is the cache size usually given to the programmer as a constant? Does the compiler watch out for these kind of issues? At what layer of the stack do we avoid this?