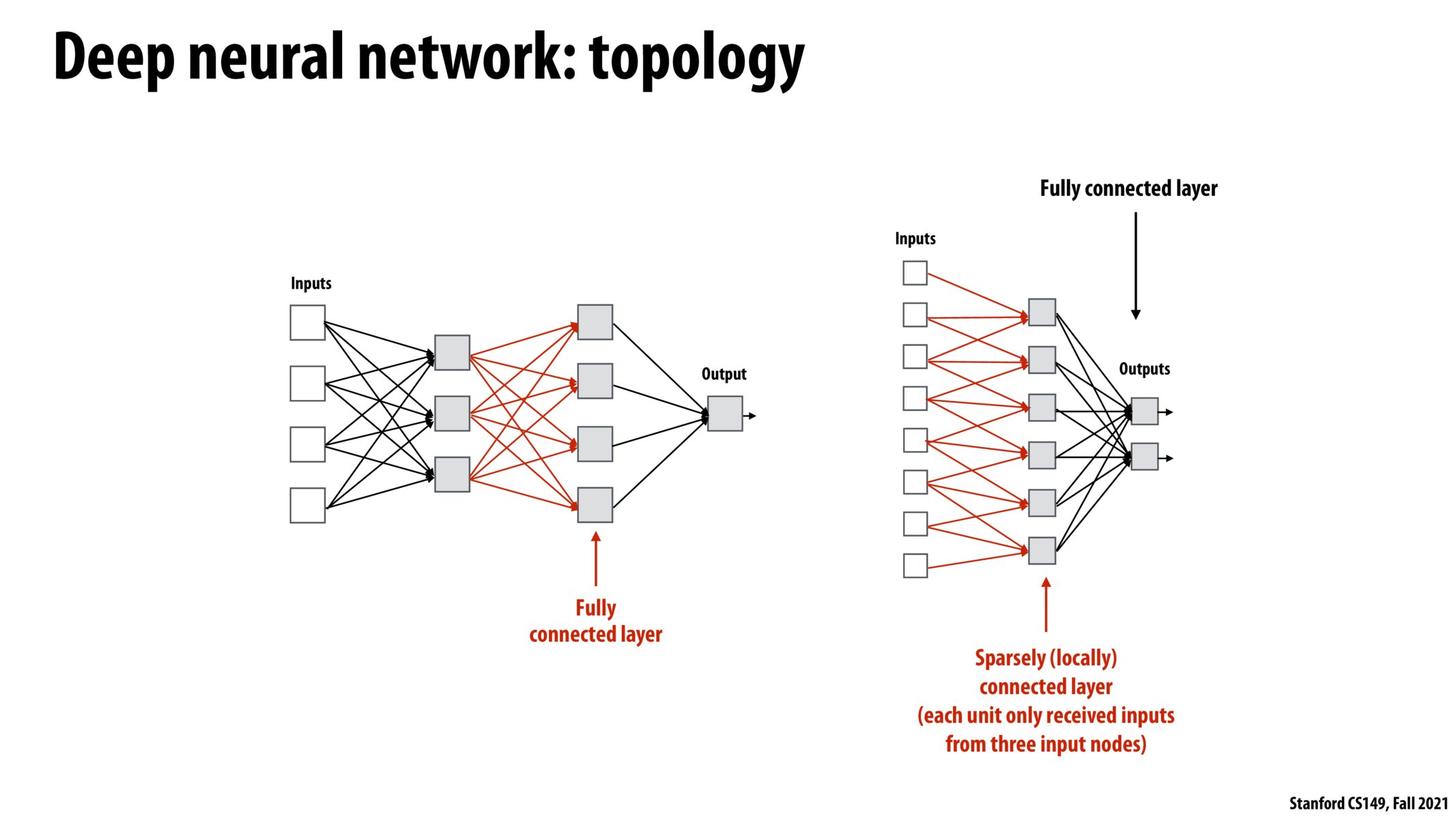

I'm not sure I've heard of any other mainstream classification besides "fully-connected" or "sparsely-connected" -- I think if you don't have every possible connection, it would just be sparse.

Are the steps in these repeated convolutions sequentially applied or can they be run in parallel in any cases?

@tigerpanda In deep learning, a sparsely connected layer often appears as the result of dropout, which randomly zeroes a portion of the units (and with them all of their connections) during training to make the model more robust. People also prune and re-train a model so that its layers become sparsely connected, in order to compress it (lower the number of parameters). There may be other special use cases for sparsely connected layers, but they are not common.
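To make the compression idea concrete, here is a minimal sketch (plain Python, no framework) of turning a fully connected layer into a sparsely connected one by masking weights. The helper names `magnitude_mask` and `apply_mask`, the 4x3 layer size, and the 50% keep fraction are all assumptions for illustration; keeping the largest-magnitude weights is one common pruning heuristic, not necessarily what any particular paper or library does.

```python
import random

def dense_forward(x, W):
    """Fully connected layer without bias: y[j] = sum_i x[i] * W[i][j]."""
    n_out = len(W[0])
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(n_out)]

def magnitude_mask(W, keep_fraction):
    """Hypothetical helper: binary mask that keeps the largest-magnitude weights."""
    flat = sorted((abs(w) for row in W for w in row), reverse=True)
    k = max(1, int(len(flat) * keep_fraction))
    threshold = flat[k - 1]
    return [[1 if abs(w) >= threshold else 0 for w in row] for row in W]

def apply_mask(W, mask):
    """Zero out every connection whose mask entry is 0."""
    return [[w * m for w, m in zip(w_row, m_row)] for w_row, m_row in zip(W, mask)]

rng = random.Random(0)
# Assumed toy layer: 4 inputs fully connected to 3 outputs (12 weights).
W = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]

mask = magnitude_mask(W, keep_fraction=0.5)
W_sparse = apply_mask(W, mask)

x = [1.0, 2.0, 3.0, 4.0]
y_dense = dense_forward(x, W)          # uses all 12 connections
y_sparse = dense_forward(x, W_sparse)  # uses only the surviving connections
n_kept = sum(sum(row) for row in mask)
print(n_kept, "of", len(W) * len(W[0]), "connections kept")
```

Note that the masked layer here still does the same amount of arithmetic; any actual speed-up depends on storing and multiplying the weights in a sparse format.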

Within the same layer you can parallelize the computation but I don't think this is possible across layers as each layer depends on the output of the previous layer.
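A small sketch of that dependency structure, assuming a toy two-layer network with ReLU units (the weights and the `unit_output` / `layer_forward` names are made up for illustration): each unit in a layer only reads the layer's input, so the units can be mapped independently, while the loop over layers has to run in order.

```python
from concurrent.futures import ThreadPoolExecutor

def unit_output(x, weights):
    """One unit: ReLU of the dot product between the input and its weights."""
    return max(0.0, sum(xi * wi for xi, wi in zip(x, weights)))

def layer_forward(x, layer, pool):
    # Every unit depends only on x, so the units can be computed independently.
    return list(pool.map(lambda w: unit_output(x, w), layer))

# Assumed toy network: each inner list holds the incoming weights of one unit.
layers = [
    [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]],  # layer 1: 2 inputs -> 3 units
    [[1.0, 1.0, 1.0], [-1.0, 0.0, 1.0]],     # layer 2: 3 inputs -> 2 units
]

x = [1.0, 2.0]
with ThreadPoolExecutor(max_workers=4) as pool:
    h = x
    for layer in layers:  # sequential: layer k needs the output of layer k-1
        h = layer_forward(h, layer, pool)

print(h)
```

The thread pool is only there to show which work is independent; in practice frameworks get this parallelism from vectorized matrix or convolution kernels rather than Python threads.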

Adding to @leave's comment, there is a growing interest in sparse neural networks. The Lottery Ticket Hypothesis (https://arxiv.org/abs/1803.03635) is a very interesting paper if you are interested in sparse architectures.

I would imagine there is a significant speed improvement in using sparsely connected layers vs. fully connected layers, but are there accuracy benefits to using fully connected layers?


Just to clarify, is a sparsely connected layer simply a layer that is not fully connected (i.e., it doesn't have an arrow coming in from every one of the inputs), or is there something in between sparsely connected and fully connected?